The Ethics of AI: Navigating Bias, Transparency, and Accountability


Table of Contents

- Introduction

- Exploring the Impact of AI on Human Rights and Privacy

- Examining the Role of AI in Uncovering and Mitigating Unconscious Bias

- Investigating the Potential for AI to Discriminate Against Marginalized Groups

- Assessing the Need for Regulations to Protect Against AI-Based Discrimination

- Analyzing the Benefits and Risks of AI-Based Surveillance Systems

- Q&A

- Conclusion

“Unlock the Potential of AI: Empowering Ethical Solutions for a Better Future”

Introduction

AI ethics is a rapidly growing field of research concerned with the ethical implications of artificial intelligence (AI). AI could change how we live, work, and interact with each other, but it also raises ethical concerns, including bias, loss of privacy, and malicious use. This article focuses on bias and privacy. It discusses how AI can discriminate against certain groups, how it can also help uncover bias, and why safeguards are needed to protect user data. It then considers the case for regulation and the benefits and risks of AI-based surveillance.

Exploring the Impact of AI on Human Rights and Privacy

The rise of artificial intelligence (AI) is a major technological development, and its impact on human rights and privacy is a growing concern. Alongside its benefits, AI raises important questions about how those rights are protected.

AI is already being used in a variety of ways, from facial recognition technology to automated decision-making systems. These technologies can be used to improve efficiency and accuracy, but they can also be used to monitor and control individuals. This raises serious questions about the potential for AI to be used to violate human rights and privacy.

One of the most pressing concerns is the potential for AI to be used to discriminate against certain groups of people. AI systems are often trained on data sets that contain biases, which can lead to discriminatory outcomes. For example, some facial recognition systems have been found to be less accurate for people of color, and some automated decision-making systems have been found to be biased against women and people of color.

Another concern is the potential for AI to be used to surveil individuals without their knowledge or consent. AI-powered surveillance systems can be used to track people’s movements, monitor their activities, and even predict their behavior. This raises serious questions about the right to privacy and the potential for AI to be used to violate it.

Finally, there is the potential for AI to be used to manipulate individuals and influence their behavior. AI-powered systems can target individuals with personalized messages and content designed to shape their opinions and decisions. This raises questions about freedom of expression and about how much influence such systems should be allowed to have.

As AI continues to develop and become more pervasive, it is essential that we consider its implications for human rights and privacy. We must ensure that AI is used responsibly and does not lead to violations of those rights. We must also ensure that AI is used in a way that is transparent and accountable, so that individuals can understand how their data is being used and have a say in it. Only then can AI respect and protect our rights and privacy.

Examining the Role of AI in Uncovering and Mitigating Unconscious Bias

The use of artificial intelligence (AI) is becoming increasingly prevalent in the workplace, and it is important to consider what this technology means for unconscious bias. Unconscious bias occurs when people make decisions or judgments based on preconceived notions and stereotypes they are not aware of holding. It can lead to discrimination and other negative outcomes, including the exclusion of certain groups from opportunities and resources.

AI has the potential to help uncover and mitigate unconscious bias in the workplace. AI algorithms can be used to analyze data and identify patterns that may indicate the presence of bias. For example, AI can be used to analyze job postings to identify language that may be exclusionary or discriminatory. AI can also be used to analyze hiring and promotion decisions to identify any potential disparities in outcomes.
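
The disparity analysis described above can be sketched in a few lines. This is a minimal illustration, not a complete audit: the group names and decision data are hypothetical, and the 0.8 threshold mentioned in the comments refers to the "four-fifths rule" often used as a first screening check in US employment contexts, which is only one of several possible measures.

```python
from collections import defaultdict

def selection_rates(decisions):
    """Return the fraction of positive outcomes per group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True if the candidate was hired or promoted.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def impact_ratio(rates):
    """Ratio of the lowest group selection rate to the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so there is no disparity to measure
    return min(rates.values()) / highest

# Hypothetical data: 10 applicants per group.
decisions = (
    [("group_a", True)] * 5 + [("group_a", False)] * 5
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)
rates = selection_rates(decisions)  # group_a: 0.5, group_b: 0.3
ratio = impact_ratio(rates)         # roughly 0.6, below the 0.8 screening threshold
print(rates, ratio)
```

A low ratio does not prove discrimination on its own. It flags a disparity that people then need to investigate.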

In addition to uncovering unconscious bias, AI can also be used to mitigate it. AI algorithms can be used to create more equitable hiring and promotion processes. For example, AI can be used to remove any identifying information from job applications, such as names and addresses, to reduce the potential for bias. AI can also be used to create more objective criteria for evaluating job applicants, such as using standardized tests or structured interviews.
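
As a rough sketch of the anonymization step described above, the snippet below strips identifying fields from an application before it reaches a reviewer. The field names are assumptions made for illustration and would need to match the actual application format.

```python
# Field names here are hypothetical; adapt them to the real application schema.
IDENTIFYING_FIELDS = frozenset({"name", "address", "email", "phone", "date_of_birth"})

def redact_application(application, fields=IDENTIFYING_FIELDS):
    """Return a copy of the application without the identifying fields."""
    return {key: value for key, value in application.items() if key not in fields}

application = {
    "name": "Jane Doe",
    "address": "12 Example Street",
    "years_experience": 6,
    "skills": ["python", "sql"],
}
blind = redact_application(application)
print(blind)  # only years_experience and skills remain
```

Removing explicit fields reduces but does not eliminate the risk of bias, since other fields can still act as proxies for the removed information.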

Ultimately, AI can be a powerful tool for uncovering and mitigating unconscious bias in the workplace. However, it is important to remember that AI is not a panacea. AI algorithms are only as good as the data they are trained on, and they can be subject to the same biases as humans. It is also important to remember that AI is not a substitute for human judgment. AI can be used to identify potential areas of bias, but it is up to humans to take action to address any issues that are uncovered.

By leveraging the power of AI, organizations can take steps to uncover and mitigate unconscious bias in the workplace. This can help create a more equitable and inclusive environment for all employees.

Investigating the Potential for AI to Discriminate Against Marginalized Groups

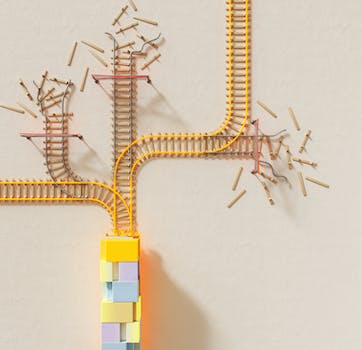

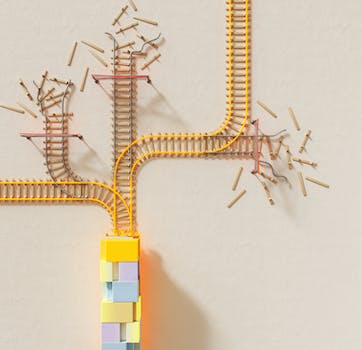

The potential for artificial intelligence (AI) to discriminate against marginalized groups is a growing concern in the tech industry. AI algorithms are increasingly being used to make decisions that affect people’s lives, from job applications to loan approvals. But if these algorithms are not designed and implemented with fairness and equity in mind, they can perpetuate existing biases and lead to discrimination against certain groups.

AI algorithms are trained on data sets that can reflect the biases of the people and processes that produced them. If the data set is not representative of the population, the algorithm may learn to discriminate against certain groups. For example, if an algorithm is trained on a data set that is predominantly male, it may learn to favor male applicants over female applicants.
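
One simple way to check whether a data set is representative is to compare each group's share of the training data with its share of a reference population. The sketch below assumes group labels are available for each training example and uses made-up reference shares. It illustrates the idea and is not a complete fairness check.

```python
from collections import Counter

def representation_gaps(samples, reference_shares):
    """Compare each group's share of the data with a reference share.

    Returns {group: data_share - reference_share}. Negative values mean
    the group is under-represented relative to the reference.
    """
    counts = Counter(samples)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("samples must not be empty")
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }

# Hypothetical training labels and reference population shares.
training_groups = ["male"] * 80 + ["female"] * 20
reference = {"male": 0.5, "female": 0.5}
gaps = representation_gaps(training_groups, reference)
print(gaps)  # female is under-represented by about 30 percentage points
```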

In addition, AI algorithms can be used to automate decisions that are traditionally made by humans. This can reduce transparency and accountability, because it is often difficult to determine why an automated decision was made. The problem is especially serious for decisions that affect marginalized groups, where hidden biases are harder to identify and address.

Finally, AI algorithms can be used to target particular groups with particular types of content. For example, an algorithm may target a group with ads for products or services that do not benefit its members or that actively harm them. This can lead to further marginalization and exploitation of these groups.

It is important for tech companies to be aware of the potential for AI to discriminate against marginalized groups and take steps to ensure that their algorithms are designed and implemented with fairness and equity in mind. This includes using data sets that are representative of the population, providing transparency and accountability for decisions made by AI algorithms, and avoiding targeting certain groups with content that is not beneficial to them. By taking these steps, tech companies can help ensure that AI is used in a way that is equitable and beneficial to all.

Assessing the Need for Regulations to Protect Against AI-Based Discrimination

As artificial intelligence (AI) becomes increasingly prevalent in our lives, it is important to consider the potential for AI-based discrimination. AI systems are often designed to make decisions based on data, and if the data used to train the system is biased, the system can perpetuate discrimination. This is especially concerning when AI is used to make decisions that affect people’s lives, such as in hiring, loan applications, and criminal justice.

AI systems can be designed to be fair and unbiased, but this requires careful attention to the data used to train them, because a system trained on biased data will reproduce that bias. AI systems can also be designed to be transparent and explainable, so that people can understand why a decision was made.

Regulations are needed to protect against AI-based discrimination. These regulations should ensure that AI systems are designed to be fair and unbiased, and that they are transparent and explainable. Additionally, regulations should require companies to regularly audit their AI systems to ensure that they are not perpetuating discrimination.
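
A regular audit of the kind described above could start by comparing error rates across groups. The following sketch assumes the auditor has, for each decision, a group label, the system's prediction, and the actual outcome. The record format and the sample data are hypothetical.

```python
def audit_by_group(records):
    """Compute accuracy and false positive rate per group.

    `records` is an iterable of (group, predicted, actual) tuples
    with boolean `predicted` and `actual` values.
    """
    stats = {}
    for group, predicted, actual in records:
        s = stats.setdefault(
            group, {"n": 0, "correct": 0, "false_pos": 0, "negatives": 0}
        )
        s["n"] += 1
        if predicted == actual:
            s["correct"] += 1
        if not actual:
            s["negatives"] += 1
            if predicted:
                s["false_pos"] += 1
    return {
        group: {
            "accuracy": s["correct"] / s["n"],
            # Undefined when the group has no actual negatives.
            "false_positive_rate": (
                s["false_pos"] / s["negatives"] if s["negatives"] else None
            ),
        }
        for group, s in stats.items()
    }

# Hypothetical audit sample: (group, predicted, actual).
records = [
    ("group_a", True, True), ("group_a", False, False),
    ("group_a", False, False), ("group_a", True, False),
    ("group_b", True, True), ("group_b", True, False),
    ("group_b", True, False), ("group_b", False, False),
]
report = audit_by_group(records)
for group, metrics in report.items():
    print(group, metrics)
```

In this made-up sample the system is less accurate and raises more false positives for group_b, which is the kind of gap an audit is meant to surface.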

Fairness, transparency, and regular audits are easier to build into AI systems early than to add after harm has occurred. It is important that we take steps to protect against AI-based discrimination now, before it becomes a bigger problem.

Analyzing the Benefits and Risks of AI-Based Surveillance Systems

AI-based surveillance systems are becoming increasingly popular in both public and private settings. These systems use artificial intelligence (AI) to monitor and analyze video footage, detect suspicious activity, and alert security personnel. While these systems offer many potential benefits, they also come with certain risks that must be considered.

The primary benefit of AI-based surveillance systems is their potential to detect suspicious activity more quickly, and in some settings more accurately, than human security personnel. AI-based systems can be programmed to recognize certain behaviors or objects, such as a person carrying a weapon or a vehicle parked in an unauthorized area. This allows security personnel to respond more quickly to potential threats, potentially preventing serious incidents.

AI-based surveillance systems can also cover large areas more efficiently than human security personnel alone. With automated monitoring, a small team can watch multiple areas simultaneously and direct its attention to the locations where the system raises an alert.

However, AI-based surveillance systems also come with certain risks. One of the primary concerns is the potential for false positives. AI-based systems can be programmed to recognize certain behaviors or objects, but they can also mistakenly identify innocent activities as suspicious. This can lead to unnecessary alarm and disruption, as well as potential legal issues if innocent people are wrongly accused of criminal activity.
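
A short calculation shows why false positives matter so much when real threats are rare. The numbers below are hypothetical and chosen only to illustrate the effect. The function applies Bayes' rule to estimate what fraction of alerts correspond to a real incident.

```python
def alert_precision(prevalence, true_positive_rate, false_positive_rate):
    """Probability that a raised alert corresponds to a real incident."""
    true_alerts = prevalence * true_positive_rate
    false_alerts = (1 - prevalence) * false_positive_rate
    total_alerts = true_alerts + false_alerts
    if total_alerts == 0:
        return 0.0  # the system never raises an alert
    return true_alerts / total_alerts

# Hypothetical: 1 in 10,000 observed events is a real threat, the system
# catches 99% of real threats and wrongly flags 1% of innocent events.
p = alert_precision(
    prevalence=0.0001, true_positive_rate=0.99, false_positive_rate=0.01
)
print(f"{p:.2%} of alerts are real threats")
```

Under these assumptions, fewer than 1 in 100 alerts points to a real threat, even though the system looks highly accurate on paper.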

Another risk associated with AI-based surveillance systems is the potential for misuse. AI-based systems can be used to monitor people without their knowledge or consent, which can lead to privacy violations and other legal issues. Additionally, AI-based systems can be used to target certain groups of people, such as racial or religious minorities, which can lead to discrimination.

Finally, AI-based surveillance systems can be vulnerable to hacking and other cyberattacks. If a hacker is able to gain access to the system, they can potentially manipulate the data or use it for malicious purposes.

Overall, AI-based surveillance systems offer many potential benefits, but they also come with certain risks that must be considered. It is important to weigh the potential benefits and risks carefully before implementing an AI-based surveillance system.

Q&A

Q1: What is AI bias?

A1: AI bias is when an AI system produces results that are systematically prejudiced due to the data it was trained on, the algorithms it uses, or the people who designed it. This can lead to unfair outcomes for certain groups of people.

Q2: How can AI bias be addressed?

A2: AI bias can be addressed by using data sets that are representative of the population, using algorithms that are transparent and explainable, and by having a diverse team of people involved in the design and implementation of the AI system.

Q3: What is AI privacy?

A3: AI privacy is the protection of personal data from unauthorized access or use. This includes data collected or processed by AI systems, such as the face images and voice recordings used by facial recognition and voice recognition systems.

Q4: How can AI privacy be addressed?

A4: AI privacy can be addressed by implementing strong security measures, such as encryption and access control, and by ensuring that data is collected and used in a responsible manner.
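
As a minimal sketch of the access control mentioned in A4, the snippet below checks whether a role may perform an action on personal data and denies anything that has not been explicitly granted. The role and action names are hypothetical and stand in for whatever an organization actually defines.

```python
# Hypothetical roles and permissions, for illustration only.
ROLE_PERMISSIONS = {
    "analyst": {"read_aggregates"},
    "privacy_officer": {
        "read_aggregates",
        "read_personal_data",
        "delete_personal_data",
    },
}

def is_allowed(role, action):
    """Deny by default: only explicitly granted actions are permitted."""
    return action in ROLE_PERMISSIONS.get(role, set())

print(is_allowed("analyst", "read_personal_data"))          # False
print(is_allowed("privacy_officer", "read_personal_data"))  # True
```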

Q5: What is the role of ethics in AI?

A5: The role of ethics in AI is to ensure that AI systems are designed and used in a way that is fair, responsible, and respectful of human rights. This includes addressing issues such as bias and privacy, as well as considering the potential impacts of AI on society.

Conclusion

The ethics of AI is an important and complex subject that requires careful consideration. AI technology has the potential to revolutionize many aspects of our lives, but it also carries risks of bias and loss of privacy. It is essential that we take steps to ensure that AI is used responsibly and ethically, and that these risks are addressed. We must also ensure that AI is used in a way that respects the rights and dignity of all individuals. By doing so, we can help ensure that AI is used for the benefit of all.